
Serverless containers mark a notable evolution from traditional containerization. Traditional containers run continuously, so they consume resources even when idle. Serverless containers, by contrast, are ephemeral and run on demand. For developers, this means less time spent on server management and more time spent writing code.

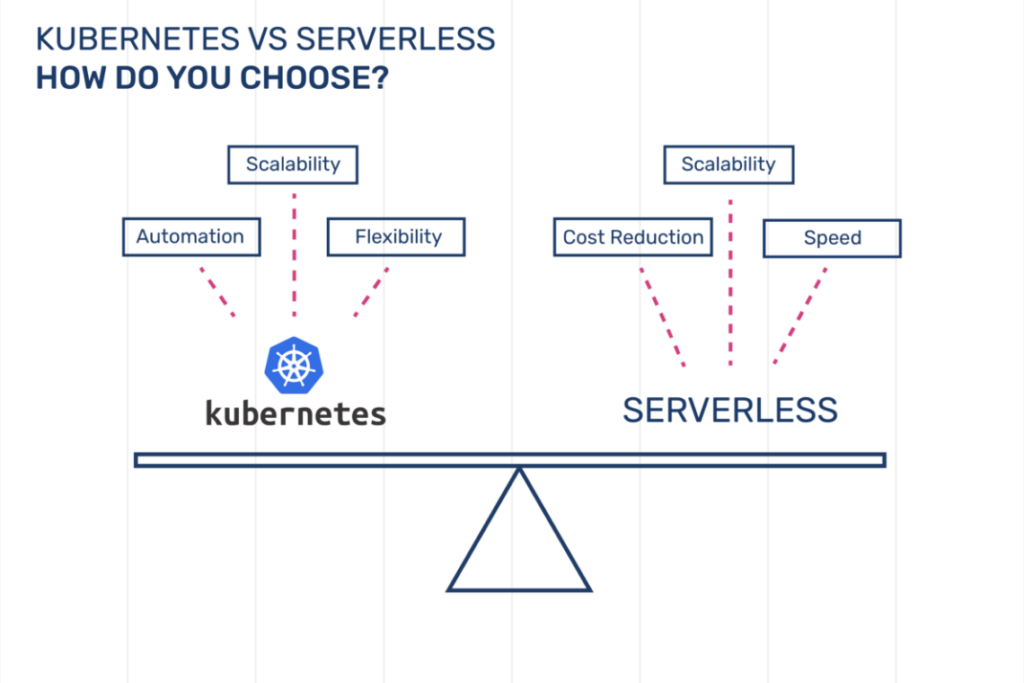

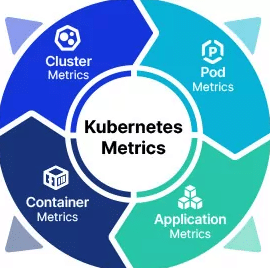

Kubernetes, or K8s, excels at automating the deployment, scaling, and management of these containerized applications. It groups containers into manageable, logical units, improving application availability, enabling smooth scaling, load-balancing network traffic, and self-healing by replacing failed containers. These features make Kubernetes an indispensable tool in modern cloud computing.

Adoption reflects this shift: a 2023 industry report found that 46% of organizations running containers now use serverless containers, up from 31% two years earlier.

So how do you run serverless containers on Kubernetes? Here's a quick overview.

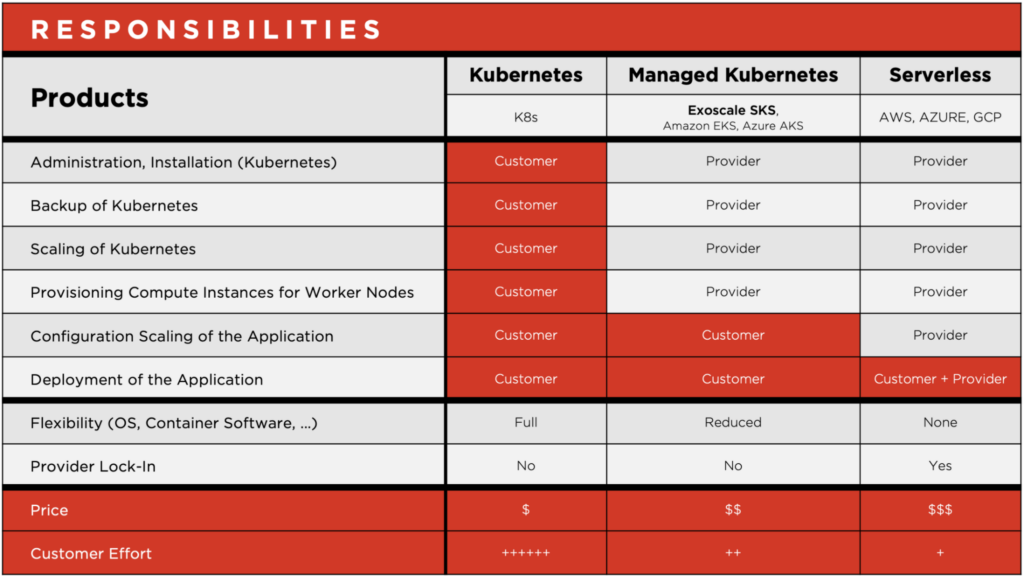

Choosing the right Kubernetes platform is essential for the effective and efficient deployment of serverless containers. This critical first step influences not only the ease of deployment but also the performance and security of your serverless applications.

Here is a quick list of popular Kubernetes platforms and what they offer.

Once you’ve selected the ideal Kubernetes platform, the next critical step is to set up the Kubernetes environment. This step is fundamental in ensuring that your serverless containers operate efficiently and securely.

Here are some things to consider when setting up your Kubernetes environment.

When managing Kubernetes clusters, effectively using tools and dashboards can significantly enhance your experience. Start with the Kubernetes Dashboard, a user-friendly interface providing a visual overview of your cluster. It simplifies monitoring and troubleshooting, making cluster management more accessible.

Incorporating automation tools like Helm is also beneficial. Helm, known for its package management capabilities, aids in defining, installing, and upgrading even complex Kubernetes applications through Helm charts.

For performance insights, set up monitoring tools such as Prometheus, which collects and stores time-series metrics, and Grafana, which visualizes them in dashboards. Together they let you track the performance of your serverless containers and the overall health of the Kubernetes environment.

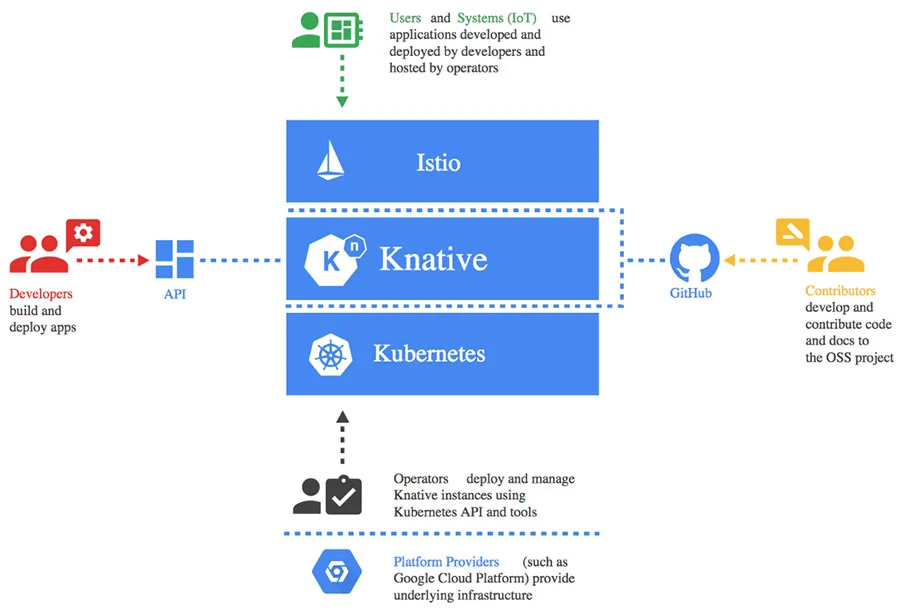

With your Kubernetes environment set up, the next step is to implement a serverless framework. Serverless frameworks play a pivotal role in simplifying the management and deployment of serverless containers within Kubernetes. They provide abstraction layers that handle much of the underlying infrastructure management, allowing you to focus on building and deploying your applications.

Serverless frameworks automate many aspects of deployment, such as resource provisioning and scaling, based on the workload requirements. They ensure that resources are used optimally, scaling up or down as needed, thereby aligning with the serverless principle of pay-for-what-you-use.
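The "scale up or down as needed" behaviour can be illustrated with a concurrency-based rule that also scales idle services to zero. This is a simplified model under stated assumptions, not the algorithm of any specific framework: `desired_pods`, `target_per_pod`, and `max_pods` are hypothetical names, and real autoscalers add smoothing windows and cold-start handling on top.

```python
import math


def desired_pods(in_flight_requests: int, target_per_pod: int, max_pods: int) -> int:
    """How many pods a simple concurrency-based autoscaler would request.

    With no traffic the service scales to zero; otherwise it runs just enough
    pods that each handles at most `target_per_pod` concurrent requests,
    capped at `max_pods`.
    """
    if target_per_pod <= 0 or max_pods < 0:
        raise ValueError("target_per_pod must be positive and max_pods non-negative")
    if in_flight_requests <= 0:
        return 0  # scale to zero: an idle service holds no pods
    return min(max_pods, math.ceil(in_flight_requests / target_per_pod))


for load in (0, 1, 45, 250, 5000):
    print(load, "->", desired_pods(load, target_per_pod=50, max_pods=20))
```

Scaling to zero is what makes the pay-for-what-you-use model possible; the trade-off is a cold start when the first request arrives after an idle period.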

These frameworks leverage Kubernetes’ features for container orchestration, offering a seamless and cohesive environment for running serverless applications.

After setting up your Kubernetes environment and choosing a serverless framework, the next critical step is preparing your applications for serverless deployment. This step involves containerizing your applications, a process that must be carefully managed to ensure efficiency, speed, and security.

Serverless architectures thrive on stateless applications. Design your applications such that each instance can be quickly created, destroyed, and replaced without persisting data in the container.

Break down your application into microservices or functions that can run independently. This approach enhances scalability and eases management.
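Keeping no state in the container can be sketched as a small, independent function whose only state lives in an external store. The `KeyValueStore` interface, `handle_visit`, and `InMemoryStore` are hypothetical names chosen for illustration; in a deployment the store would be a database or cache outside the container, and each such function could be packaged and scaled as its own service.

```python
from __future__ import annotations

from typing import Optional, Protocol


class KeyValueStore(Protocol):
    """Any external store (database, cache, object storage) the function can reach."""

    def get(self, key: str) -> Optional[int]: ...

    def set(self, key: str, value: int) -> None: ...


def handle_visit(user_id: str, store: KeyValueStore) -> dict:
    """Stateless handler: all state lives in the external store, not the container.

    Because nothing is kept in memory between calls, any replica can serve any
    request, and replicas can be created or destroyed at any time. A real store
    would need an atomic increment to avoid races between concurrent replicas.
    """
    key = f"visits:{user_id}"
    visits = (store.get(key) or 0) + 1
    store.set(key, visits)
    return {"user": user_id, "visits": visits}


class InMemoryStore:
    """Local stand-in for the external store, for testing only."""

    def __init__(self) -> None:
        self._data: dict[str, int] = {}

    def get(self, key: str) -> Optional[int]:
        return self._data.get(key)

    def set(self, key: str, value: int) -> None:
        self._data[key] = value


store = InMemoryStore()
print(handle_visit("alice", store))  # {'user': 'alice', 'visits': 1}
print(handle_visit("alice", store))  # {'user': 'alice', 'visits': 2}
```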

Deploying serverless containers to Kubernetes is a critical phase where your preparation and configuration come into play.

A Kubernetes deployment manifest is a file, usually written in YAML (JSON is also accepted), that defines how your application should run in the cluster. For serverless containers, this manifest specifies the container image, the number of replicas, and other configuration details.

In your manifest, define each serverless function or service, including the path and the container image it should use. Be clear and precise in these definitions to ensure smooth deployment and operation.
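As a hedged illustration, the sketch below builds a minimal `apps/v1` Deployment as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts just like YAML. The application name `thumbnailer`, the image `registry.example.com/thumbnailer:1.4.2`, and the port are placeholders, and `deployment_manifest` is a hypothetical helper. The detail worth noticing is that `spec.selector.matchLabels` must match the pod template's labels.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int, port: int) -> dict:
    """Build a minimal apps/v1 Deployment as a plain dict."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


manifest = deployment_manifest("thumbnailer", "registry.example.com/thumbnailer:1.4.2", 2, 8080)
print(json.dumps(manifest, indent=2))
```

Redirecting the output to a file such as `thumbnailer.json` and running `kubectl apply -f thumbnailer.json` would create the Deployment.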

Store and manage your manifests in version control. This lets you track changes and roll back if necessary, keeping your deployments stable.

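In your deployment manifest, define resource requests and limits for each container. Requests reserve a certain amount of CPU and memory for the container: the scheduler only places a pod on a node with enough unreserved capacity to satisfy them. Limits cap what the container may consume: CPU usage above the limit is throttled, and a container that exceeds its memory limit may be terminated (OOM-killed).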

Balance these settings carefully to optimize for performance and cost. Setting them too low can cause throttling, out-of-memory restarts, and poor performance, while setting them too high reserves capacity you pay for but never use.
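Kubernetes expresses CPU in cores or millicores (`250m` is a quarter of a core) and memory in bytes with suffixes such as `Mi` (2^20) or `M` (10^6), and it rejects a container whose request exceeds its limit. The sketch below parses a subset of these quantity suffixes and flags that mistake before you apply a manifest. `parse_quantity` and `check_resources` are hypothetical helpers that ignore rarer suffixes such as `Pi` or `E`; a server-side check such as `kubectl apply --dry-run=server` validates manifests more completely.

```python
from __future__ import annotations

from decimal import Decimal

# A subset of Kubernetes quantity suffixes: binary (Ki, Mi, ...), decimal (k, M, ...),
# and "m" for milli (e.g. millicores of CPU).
SUFFIXES = {
    "Ki": Decimal(2**10), "Mi": Decimal(2**20), "Gi": Decimal(2**30), "Ti": Decimal(2**40),
    "k": Decimal(10**3), "M": Decimal(10**6), "G": Decimal(10**9), "T": Decimal(10**12),
    "m": Decimal("0.001"),
}


def parse_quantity(quantity: str) -> Decimal:
    """Convert a quantity such as '250m', '0.5', or '128Mi' to a plain number."""
    for suffix, factor in SUFFIXES.items():
        if quantity.endswith(suffix):
            return Decimal(quantity[: -len(suffix)]) * factor
    return Decimal(quantity)  # no suffix: plain cores or bytes


def check_resources(resources: dict) -> list[str]:
    """Report any resource whose request exceeds its limit (Kubernetes rejects these)."""
    requests = resources.get("requests", {})
    limits = resources.get("limits", {})
    return [
        f"{name}: request {requests[name]} exceeds limit {limit}"
        for name, limit in limits.items()
        if name in requests and parse_quantity(requests[name]) > parse_quantity(limit)
    ]


ok = {"requests": {"cpu": "250m", "memory": "128Mi"}, "limits": {"cpu": "500m", "memory": "256Mi"}}
bad = {"requests": {"cpu": "250m", "memory": "512Mi"}, "limits": {"cpu": "1", "memory": "256Mi"}}
print(check_resources(ok))   # []
print(check_resources(bad))  # ['memory: request 512Mi exceeds limit 256Mi']
```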

Readiness probes determine when a container is ready to start accepting traffic. A failed readiness probe will remove the pod from service endpoints, ensuring that traffic is not sent to containers that are not ready.

Liveness probes help Kubernetes understand if a container is alive or dead. If a container is unresponsive, Kubernetes can restart it automatically, ensuring high availability and reliability.

Configure these probes in your deployment manifests. You can use HTTP requests, TCP socket checks, or custom commands as probes, depending on your application’s requirements.
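The container spec below, a Python dictionary that can be printed as JSON for `kubectl apply`, is a minimal sketch of both probe types. It assumes a hypothetical application that serves a `/ready` HTTP endpoint on port 8080; the name, image, path, and timings are placeholders to adjust. An HTTP probe counts any status code from 200 to 399 as success.

```python
import json

# Container spec for a hypothetical "thumbnailer" app listening on port 8080.
container = {
    "name": "thumbnailer",
    "image": "registry.example.com/thumbnailer:1.4.2",
    "ports": [{"containerPort": 8080}],
    # Readiness: the pod receives Service traffic only while GET /ready
    # returns a status between 200 and 399.
    "readinessProbe": {
        "httpGet": {"path": "/ready", "port": 8080},
        "initialDelaySeconds": 2,
        "periodSeconds": 5,
    },
    # Liveness: restart the container after 3 consecutive failed TCP connects.
    # An exec probe would instead look like {"exec": {"command": ["cat", "/tmp/healthy"]}}.
    "livenessProbe": {
        "tcpSocket": {"port": 8080},
        "periodSeconds": 10,
        "failureThreshold": 3,
    },
}

print(json.dumps(container, indent=2))
```

This dictionary would sit in the `containers` list of a Deployment's pod template.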

Automation in deployment and scaling is a cornerstone of efficient and resilient serverless container management on Kubernetes. By leveraging Kubernetes’ native capabilities and integrating CI/CD pipelines, you can achieve a highly dynamic and responsive serverless environment.

ReplicaSets in Kubernetes ensure that a specified number of pod replicas are running at any given time, which keeps your applications in their desired state and available. In practice you rarely create ReplicaSets directly: a Deployment creates and manages them for you.
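To show what "maintaining the desired state" means, here is a toy version of the control loop behind a ReplicaSet: compare the desired count with what is running, then create or delete pods to close the gap. It is a simplified model for illustration only; `reconcile` and the generated pod names are hypothetical, and the real controller watches the API server and chooses which pods to delete far more carefully.

```python
from __future__ import annotations

from itertools import count

_next_id = count(1)  # source of hypothetical pod names


def reconcile(desired: int, running: list[str]) -> tuple[list[str], list[str]]:
    """One pass of a simplified ReplicaSet-style control loop.

    Returns (pods_to_create, pods_to_delete) needed to move from the pods
    currently running to the desired replica count.
    """
    if desired < 0:
        raise ValueError("desired replica count cannot be negative")
    missing = desired - len(running)
    if missing > 0:
        return [f"pod-{next(_next_id)}" for _ in range(missing)], []
    return [], running[desired:]  # empty when already at the desired count


running = ["pod-a", "pod-b", "pod-c"]
running.remove("pod-b")  # simulate a crashed pod
to_create, to_delete = reconcile(3, running)
running = [p for p in running if p not in to_delete] + to_create
print(running)  # ['pod-a', 'pod-c', 'pod-1']
```

Running this comparison repeatedly, rather than once, is what lets Kubernetes replace failed pods automatically.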

The Horizontal Pod Autoscaler (HPA) automatically adjusts the number of pod replicas based on observed CPU utilization or other selected metrics. This is essential in serverless environments, where workloads can vary dramatically and the system must scale up or down efficiently.
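The Kubernetes documentation describes the HPA's core calculation as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, skipped when the ratio is within a tolerance (0.1 by default) and bounded by the configured minimum and maximum. The sketch below reproduces only that arithmetic, alongside an `autoscaling/v2` manifest targeting a hypothetical `thumbnailer` Deployment; it omits the real controller's handling of unready pods, missing metrics, and stabilization windows. CPU utilization is measured relative to the container's CPU request, so the HPA needs requests to be set.

```python
import math


def hpa_desired_replicas(current_replicas: int, current_utilization: float,
                         target_utilization: float, min_replicas: int,
                         max_replicas: int, tolerance: float = 0.1) -> int:
    """Core HPA arithmetic for a single utilization metric (simplified)."""
    ratio = current_utilization / target_utilization
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: leave replicas alone
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# autoscaling/v2 HPA for a hypothetical "thumbnailer" Deployment.
hpa_manifest = {
    "apiVersion": "autoscaling/v2",
    "kind": "HorizontalPodAutoscaler",
    "metadata": {"name": "thumbnailer"},
    "spec": {
        "scaleTargetRef": {"apiVersion": "apps/v1", "kind": "Deployment", "name": "thumbnailer"},
        "minReplicas": 2,
        "maxReplicas": 20,
        "metrics": [
            {
                "type": "Resource",
                "resource": {
                    "name": "cpu",
                    "target": {"type": "Utilization", "averageUtilization": 60},
                },
            }
        ],
    },
}

for replicas, cpu in ((4, 90), (4, 63), (4, 30), (10, 300)):
    print(replicas, cpu, "->", hpa_desired_replicas(replicas, cpu, 60, 2, 20))
```

With a 60% target, four replicas at 90% CPU scale to six, while four replicas at 63% stay put because the ratio falls inside the tolerance band.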

Automating deployment and scaling in Kubernetes not only streamlines your operations but also ensures your serverless containers are always running optimally, adjusting to workload fluctuations in real-time. With tools like HPA, CI/CD pipelines, event-driven scaling, and Spectral, you create a robust, secure, and highly efficient serverless environment, capable of meeting the dynamic demands of modern cloud-native applications.

The final and ongoing step in managing serverless containers on Kubernetes involves vigilant monitoring and effective management. In a serverless environment, where resources dynamically scale and adjust, maintaining visibility into the performance and health of your applications is critical.

Continuous monitoring lets you react swiftly to changes or issues in your serverless containers as they happen.

Important metrics include container start-up times, function execution times, resource utilization, error rates, and throughput. Monitoring these metrics helps ensure your serverless containers are performing optimally.
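The sketch below computes three of these metrics (throughput, error rate, and 95th-percentile execution time) from a window of request records. `RequestRecord` and `summarize` are hypothetical; the sketch treats only 5xx responses as errors and uses the simple nearest-rank percentile. In practice Prometheus or your observability platform would perform this aggregation for you.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class RequestRecord:
    duration_ms: float  # how long the function took to execute
    status: int         # HTTP status code it returned


def summarize(records: list[RequestRecord], window_seconds: float) -> dict:
    """Throughput, error rate, and p95 execution time for one time window."""
    if not records:
        return {"throughput_rps": 0.0, "error_rate": 0.0, "p95_ms": None}
    errors = sum(1 for r in records if r.status >= 500)  # count server errors only
    durations = sorted(r.duration_ms for r in records)
    n = len(durations)
    rank = (95 * n + 99) // 100  # nearest-rank p95, using integer ceiling
    return {
        "throughput_rps": n / window_seconds,
        "error_rate": errors / n,
        "p95_ms": durations[rank - 1],
    }


records = [RequestRecord(duration_ms=10.0 * i, status=200) for i in range(1, 21)]
records[3].status = 503
records[7].status = 503
print(summarize(records, window_seconds=10))
```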

Effective monitoring lets you manage your environment proactively, identifying potential issues before they impact your services.

Efficiently deploying serverless containers on Kubernetes is a multi-step process that emphasizes scalability and security. It starts with choosing a Kubernetes platform and setting up the environment, continues with selecting a serverless framework and containerizing your applications, and carries on through deployment, automated scaling, and ongoing monitoring.

Including SpectralOps streamlines this further by enhancing CI/CD pipelines and security policies in a straightforward setup. Begin today for free.
