Top 12 Open Source Code Security Tools


In today’s data-driven world, skilled developers are much sought after for their ability to build applications that serve the Big Data needs of organizations. The sheer size, complexity, and diversity of Big Data require specialized applications and dedicated hardware to process and analyze this information, with the aim of uncovering useful business insights that would otherwise be unavailable.

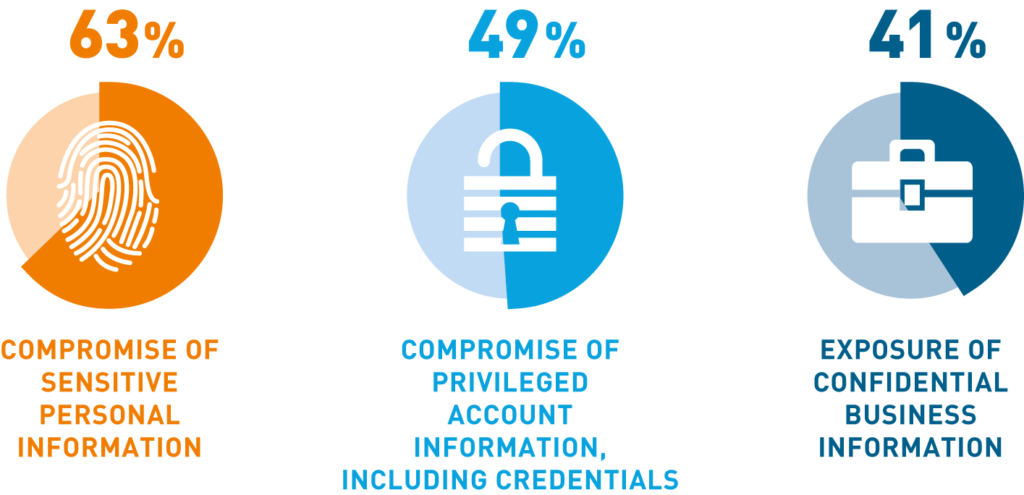

But with one analysis of data breaches from 2021 highlighting a total of 5 billion breached records, it’s critical for everyone involved in working on Big Data pipelines, from developers to DevOps engineers, to treat security with as much importance as the underlying business need they’re trying to serve.

Threat actors are likely to target any company running Big Data workloads due to the sheer volume of potentially sensitive data available for compromise. Read on to get a primer on Big Data security, including some key challenges to keep in mind and actionable best practices.

Big Data security refers to any measures used to protect data against malicious activity during the storage, processing, and analysis of datasets that are too large and complex to be handled by traditional database applications. Big Data can come in a mix of structured formats (organized into rows and columns containing numbers, dates, etc.) and unstructured formats (social media data, PDF files, emails, images, etc.). Estimates suggest that up to 90 percent of Big Data is unstructured.

Big Data’s power is that it often contains hidden insights that can improve business processes, drive innovation, or reveal unknown market trends. Since workloads to analyze this information often combine sensitive customer or proprietary data along with third-party data sources, proper data security is vital. Reputational damage and hefty financial losses are two major consequences of leaks and breaches of Big Data.

There are really three key stages to consider when trying to secure Big Data:

The types of security threats in these environments include improper access controls, Distributed Denial of Service (DDoS) attacks, endpoints generating false or malicious data, or vulnerabilities in libraries, frameworks, and applications used during Big Data workloads.

There are many challenges particular to Big Data security that emerge due to the architectural and environmental complexities involved. In a Big Data environment, you have an interplay of diverse hardware and technologies across a distributed computing environment. Some examples of challenges are:

These challenges are additions to, rather than replacements for, the usual challenges involved in securing any type of data.

With an appreciation for the challenges involved, let’s move on to some best practices for strengthening Big Data security.

Scalable encryption for data at rest and data in transit is critical to implement across a Big Data pipeline. Scalability is the key point here because you need to encrypt data across analytics toolsets and their output in addition to storage formats like NoSQL. The power of encryption is that even if a threat actor manages to intercept data packets or access sensitive files, a well-implemented encryption process makes the data unreadable.
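For the in-transit half of this practice, one possible starting point is to make every client in the pipeline refuse unverified or outdated TLS connections. The sketch below uses only Python's standard `ssl` module; the helper name `strict_client_context` is hypothetical, and the choice of TLS 1.2 as the floor is an assumption rather than a requirement stated above.

```python
import ssl


def strict_client_context() -> ssl.SSLContext:
    """Build a client-side TLS context for connections between pipeline components."""
    # create_default_context() turns on certificate and hostname verification.
    ctx = ssl.create_default_context()
    # Assumed policy: refuse anything older than TLS 1.2.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


context = strict_client_context()
```

A context like this can be passed to standard-library clients such as `http.client.HTTPSConnection(host, context=context)`. Encryption at rest is a separate concern that this sketch does not cover.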

Getting access control right provides robust protection against a range of Big Data security issues, such as insider threats and excess privileges. Role-based access can help to control access over the many layers of Big Data pipelines. For example, data analysts should have access to analytics tools like R, but they probably shouldn’t get access to tools used by Big Data developers, such as ETL software. The principle of least privilege is a good reference point for access control: limit access to only the tools and data that are strictly necessary to perform a user’s tasks.
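The analyst versus developer example can be sketched as a deny-by-default role check. The role names and permission strings below are invented for illustration and do not come from any particular product.

```python
# Hypothetical roles and permissions, for illustration only.
ROLE_PERMISSIONS = {
    "data_analyst": {"analytics:read", "analytics:run"},
    "data_engineer": {"analytics:read", "etl:read", "etl:run"},
}


def is_allowed(role: str, permission: str) -> bool:
    """Deny by default: unknown roles and unlisted permissions are rejected."""
    return permission in ROLE_PERMISSIONS.get(role, set())


analyst_can_run_r = is_allowed("data_analyst", "analytics:run")
analyst_can_run_etl = is_allowed("data_analyst", "etl:run")
```

Starting from an empty permission set and adding only what a role needs is one way to keep the mapping aligned with least privilege.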

The inherently large storage volumes and processing power needed for Big Data workloads make it practical for most businesses to use cloud computing infrastructure and services for Big Data. But despite the attractiveness of cloud computing, exposed API keys, tokens, and misconfigurations are risks in the cloud worth taking seriously. What if someone leaves an AWS data lake in S3 completely open and accessible to anyone on the Internet? Mitigating these risks becomes easier with an automated scanning tool that works fast to scan public cloud assets for security blind spots.
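As a rough illustration of what an automated check for the open S3 bucket scenario might look for, the sketch below inspects a bucket policy document that has already been loaded as a Python dictionary and flags `Allow` statements granted to everyone. The function name is hypothetical, and the check is deliberately simplified: it ignores ACLs and account-level public access settings, and it treats any statement with a `Condition` as not public.

```python
def public_statements(policy: dict) -> list:
    """Return Allow statements whose principal is the wildcard '*'."""
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may not be wrapped in a list
        statements = [statements]

    flagged = []
    for stmt in statements:
        principal = stmt.get("Principal")
        aws = principal.get("AWS") if isinstance(principal, dict) else None
        is_wildcard = (
            principal == "*"
            or aws == "*"
            or (isinstance(aws, list) and "*" in aws)
        )
        # Simplification: statements with a Condition are assumed to be restricted.
        if stmt.get("Effect") == "Allow" and is_wildcard and "Condition" not in stmt:
            flagged.append(stmt)
    return flagged


# Made-up policy for a hypothetical bucket name.
example_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Principal": "*", "Action": "s3:GetObject",
         "Resource": "arn:aws:s3:::example-data-lake/*"},
        {"Effect": "Allow", "Principal": {"AWS": "arn:aws:iam::111122223333:root"},
         "Action": "s3:PutObject", "Resource": "arn:aws:s3:::example-data-lake/*"},
    ],
}
open_statements = public_statements(example_policy)
```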

In a complex Big Data ecosystem, the security of encryption requires a centralized key management approach to ensure effective policy-driven handling of encryption keys. Centralized key management also maintains control over key governance from creation through to key rotation. For businesses running Big Data workloads in the cloud, bring your own key (BYOK) is probably the best option that allows for centralized key management without handing over control of encryption key creation and management to a third-party cloud provider.
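One small piece of policy-driven key handling is knowing which keys are overdue for rotation. The sketch below assumes a central inventory that maps key identifiers to creation dates; the inventory shape, the key names, and the 90-day default are all illustrative assumptions.

```python
from datetime import date, timedelta


def keys_due_for_rotation(created_on: dict, today: date, max_age_days: int = 90) -> list:
    """Return the IDs of keys created on or before the rotation cutoff."""
    cutoff = today - timedelta(days=max_age_days)
    return sorted(key_id for key_id, created in created_on.items() if created <= cutoff)


# Hypothetical inventory of key IDs and creation dates.
inventory = {
    "ingest-key": date(2024, 1, 1),
    "analytics-key": date(2024, 5, 1),
}
overdue = keys_due_for_rotation(inventory, today=date(2024, 6, 1))
```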

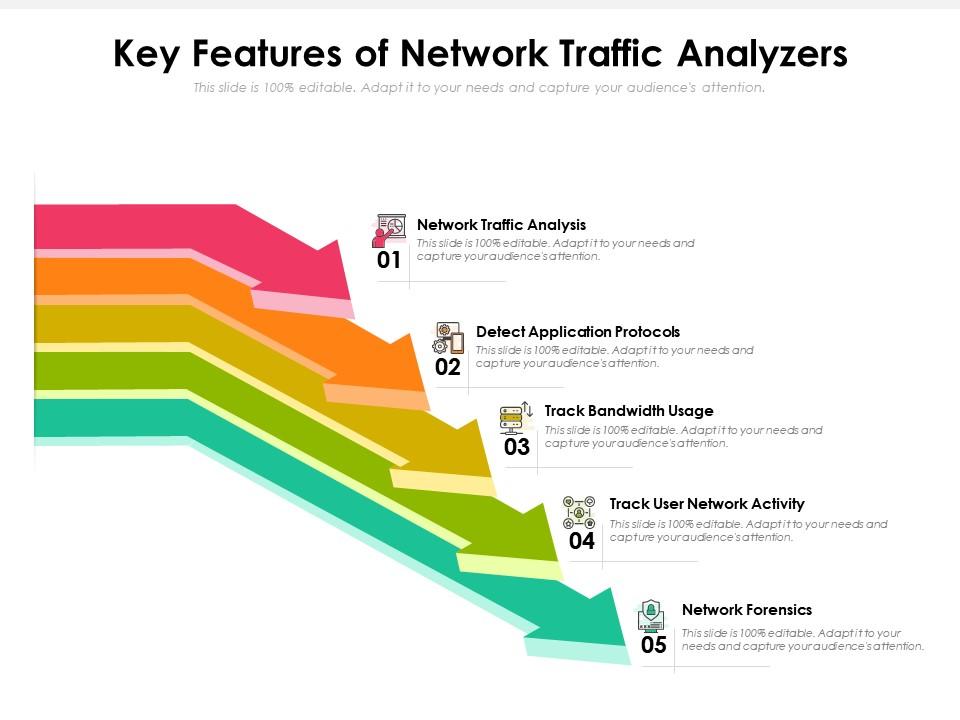

In a Big Data pipeline, there is constant traffic flow as data gets ingested from many different sources, including streaming data from social media platforms and data from user endpoints. Network traffic analysis provides visibility into network traffic and any potential anomalies such as malicious data from IoT devices or unencrypted communications protocols being used.
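A very simple form of this analysis is to flag flows that use well-known cleartext ports. The sketch below assumes flow records are available as `(source, destination, destination_port)` tuples, which is an invented format. Port numbers are only a hint, since services can run on non-standard ports.

```python
# Well-known ports for protocols that send data unencrypted.
CLEARTEXT_PORTS = {21: "FTP", 23: "Telnet", 80: "HTTP"}


def cleartext_flows(flows):
    """Return (source, destination, protocol) for flows on cleartext ports."""
    return [
        (src, dst, CLEARTEXT_PORTS[port])
        for src, dst, port in flows
        if port in CLEARTEXT_PORTS
    ]


# Sample records using documentation-reserved IP addresses.
sample_flows = [
    ("192.0.2.10", "198.51.100.5", 443),
    ("192.0.2.11", "198.51.100.7", 23),
    ("192.0.2.12", "203.0.113.9", 80),
]
suspicious = cleartext_flows(sample_flows)
```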

A 2021 report found that 98 percent of organizations feel vulnerable to insider attacks. In the context of Big Data, insider threats pose serious risks to the confidentiality of sensitive company information. A malicious insider with access to analytics reports and dashboards could reveal insights to competitors or even offer their login credentials for sale. A good place to start with insider threat detection is by examining logs for common business applications, such as RDP, VPN, Active Directory, and endpoints. These logs can reveal abnormalities worth investigating, such as unexpected data downloads or abnormal login times.
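The abnormal login time check can be sketched in a few lines. The example assumes login events have already been extracted from VPN or directory logs as `(user, ISO 8601 timestamp)` pairs, and it treats 07:00 to 19:59 on weekdays as normal; both the record format and the hours are assumptions to adjust for a real environment.

```python
from datetime import datetime

BUSINESS_HOURS = range(7, 20)  # assumed normal hours: 07:00 to 19:59


def off_hours_logins(events):
    """Return logins that happened outside business hours or on a weekend."""
    flagged = []
    for user, timestamp in events:
        when = datetime.fromisoformat(timestamp)
        is_weekend = when.weekday() >= 5  # Saturday is 5, Sunday is 6
        if is_weekend or when.hour not in BUSINESS_HOURS:
            flagged.append((user, timestamp))
    return flagged


# Made-up events for illustration.
login_events = [
    ("alice", "2024-03-04T09:15:00"),  # Monday morning
    ("bob", "2024-03-04T02:40:00"),    # Monday, middle of the night
    ("carol", "2024-03-09T11:00:00"),  # Saturday
]
to_review = off_hours_logins(login_events)
```

Flagged events are leads for an analyst to review, not proof of malicious activity.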

Threat hunting proactively searches for threats lurking undetected in your network. This process requires the skill set of an experienced cybersecurity analyst to formulate hypotheses about potential threats using intelligence from real-world attacks, threat campaigns, or correlating findings from different security tools. Ironically, Big Data can actually help improve threat hunting efforts by uncovering hidden insights in large volumes of security data. But as a way to improve Big Data security, threat hunting monitors datasets and infrastructure for artifacts that indicate a compromise of your Big Data environment.

Monitoring Big Data logs and tools for security purposes generates a lot of information, which usually ends up in a security information and event management (SIEM) solution. Given the enormous volumes of data often generated at high velocity in a Big Data environment, SIEM solutions are prone to false positives, and analysts get inundated with too many alerts. Ideally, an incident response tool can provide context on security threats that enables faster, more efficient incident investigation.

User behavior analytics goes a step further than insider threat detection by providing a dedicated toolset to monitor the behavior of users on the systems they interact with. Typically, behavior analytics uses a scoring system to create a baseline of normal user, application, and device behaviors and then alerts you when there are deviations from these baselines. With user behavior analytics you can better detect insider threats and compromised user accounts that threaten the confidentiality, integrity, or availability of the assets within your Big Data environment.
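The baseline and deviation idea can be illustrated with a basic statistical score. This is a minimal sketch that assumes a single numeric metric per user (for example, megabytes downloaded per day) and uses a z-score with a threshold of 3. Commercial behavior analytics tools use richer models, so treat this only as an illustration of the principle.

```python
from statistics import mean, stdev


def deviation_score(history, observed):
    """Number of standard deviations between the observation and the baseline mean."""
    mu = mean(history)
    sigma = stdev(history)  # needs at least two historical data points
    if sigma == 0:
        return 0.0 if observed == mu else float("inf")
    return abs(observed - mu) / sigma


def is_anomalous(history, observed, threshold=3.0):
    """Flag observations further from the baseline than the assumed threshold."""
    return deviation_score(history, observed) > threshold


# Hypothetical daily download volumes (MB) for one user.
baseline = [100, 110, 95, 105, 90]
typical_day = is_anomalous(baseline, 104)
unusual_day = is_anomalous(baseline, 900)
```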

The prospect of unauthorized data transfers keeps security leaders awake at night, particularly if data exfiltration occurs in Big Data pipelines where enormous volumes of potentially sensitive assets can be copied. Detecting data exfiltration requires in-depth monitoring of outbound traffic and the IP addresses it is sent to. Preventing exfiltration in the first place comes from tools that find harmful security errors in code and misconfigurations, along with data loss prevention and next-gen firewalls. Another important aspect is educating and raising awareness within your organization.
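One way to picture outbound monitoring is to total the bytes sent to each external address and flag destinations that exceed a limit. The record format and the limit in this sketch are assumptions. A real deployment would also need time windows and an allowlist of known destinations.

```python
from collections import defaultdict


def heavy_destinations(flows, limit_bytes):
    """Sum outbound bytes per destination IP and return those above the limit."""
    totals = defaultdict(int)
    for dest_ip, bytes_sent in flows:
        totals[dest_ip] += bytes_sent
    return {ip: total for ip, total in totals.items() if total > limit_bytes}


# Made-up outbound records using documentation-reserved IP addresses.
outbound = [
    ("203.0.113.9", 400_000_000),
    ("198.51.100.5", 2_000_000),
    ("203.0.113.9", 700_000_000),
]
flagged_destinations = heavy_destinations(outbound, limit_bytes=1_000_000_000)
```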

Frameworks, libraries, software utilities, data ingestion, analytics tools, and custom applications — Big Data security starts at the code level. Harmful security errors in code can result in data leakage regardless of whether you’ve implemented the above well-established security best practices.

So, if you’re a developer or engineer tasked with working on your organization’s Big Data pipeline, you need a solution that scans proprietary, custom, and open-source code rapidly and accurately for exposed API keys, tokens, credentials, and misconfigurations across your environment. By starting with a secure codebase, the challenge of Big Data security becomes a lot less daunting.
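To give a feel for what scanning code for exposed credentials involves, here is a minimal sketch that searches text for strings shaped like AWS access key IDs (the prefix `AKIA` followed by 16 uppercase letters or digits). It is not how Spectral or any other scanner is implemented. Production tools cover many more credential types and use additional signals to reduce false positives.

```python
import re

# Pattern for strings that look like AWS access key IDs.
AWS_KEY_ID = re.compile(r"\b(AKIA[0-9A-Z]{16})\b")


def find_key_ids(text):
    """Return (line_number, match) pairs for every key-like string in the text."""
    return [
        (lineno, match.group(1))
        for lineno, line in enumerate(text.splitlines(), start=1)
        for match in AWS_KEY_ID.finditer(line)
    ]


# AKIAIOSFODNN7EXAMPLE is the placeholder key ID used in AWS documentation.
sample_config = 'region = "us-east-1"\naws_access_key_id = "AKIAIOSFODNN7EXAMPLE"\n'
findings = find_key_ids(sample_config)
```

A check like this could run as a pre-commit hook or in CI so that key-like strings are caught before they reach a shared repository.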

Spectral provides data loss prevention through automated codebase scanning that covers the entire software development lifecycle. The tool works in minutes and can easily eliminate public blind spots across multiple data sources. Get your SpectralOps demo here.
