Top 10 CI/CD Automation Tools


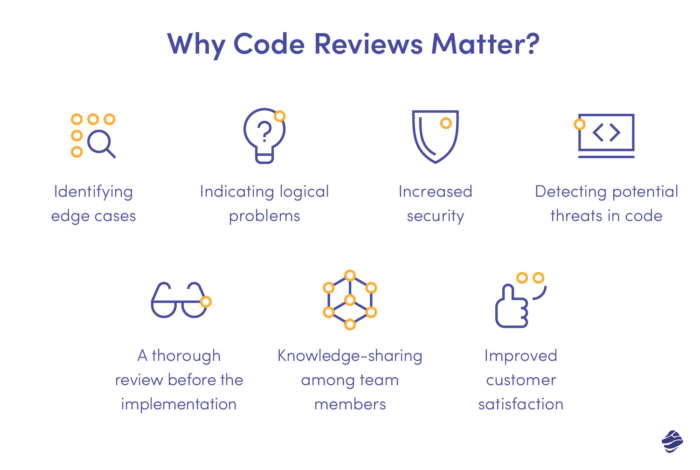

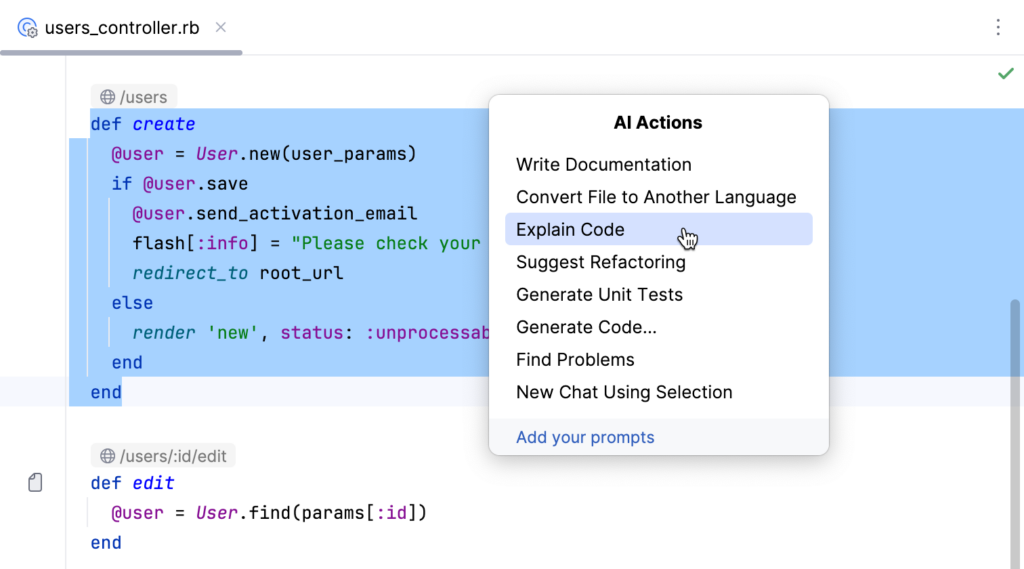

Imagine slashing the time spent on code reviews while catching more bugs and vulnerabilities than ever before. That’s the promise of AI-driven code review tools.

With 42% of large and enterprise organizations already integrating AI into their IT operations, the future of software development is here. These tools can swiftly detect syntax errors, enforce coding standards, and identify security threats, making them invaluable to development teams.

However, as powerful as AI is, it has its pitfalls. Over-reliance can lead to missed errors, false positives, and security risks. By understanding these challenges, developers can harness AI’s full potential and build smarter, more efficient code review processes.

Artificial Intelligence (AI) is revolutionizing code review processes by swiftly analyzing extensive codebases that would take human reviewers significantly longer to assess. AI-driven tools can efficiently identify syntax errors, potential bugs, and security vulnerabilities, ensuring the code meets high-quality standards. These tools utilize machine learning algorithms to scan code for common mistakes and inconsistencies, significantly reducing the likelihood of overlooked issues.

Beyond error detection, AI can enforce coding styles and best practices, ensuring uniformity across different parts of a project. By enforcing standardized coding conventions, AI helps maintain readability and reduces the cognitive load on human developers. This streamlines collaboration and makes the code more maintainable in the long run.

Moreover, AI’s ability to identify security vulnerabilities is paramount in today’s cybersecurity landscape. Automated code reviews uncover security holes and potential exploits that manual reviews may miss. By integrating AI into the code review process, development teams can enhance the robustness and security of their software, ultimately delivering higher-quality products faster and with fewer resources.

While AI can potentially transform the code review process, relying too heavily on it can lead to significant oversights. AI, despite its advanced capabilities, is not infallible. It operates based on patterns and data it has been trained on, which means it can miss errors that require human intuition and contextual understanding to identify. For example, AI-generated code can introduce complex bugs or non-standard practices that are not immediately apparent, highlighting the need for thorough manual reviews.

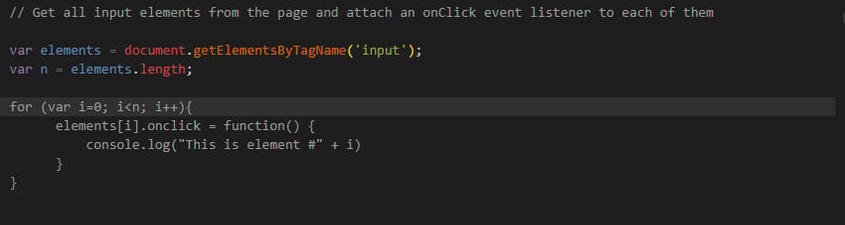

Here’s an example of an issue created by generated code.

The code is syntactically valid, but it misbehaves because of how JavaScript closures interact with a loop counter declared with var. Because var is function-scoped, every onclick handler closes over the same variable i, so each handler sees the value i holds after the loop completes, not the value it held when the handler was created. As a result, all the onclick handlers log the same number, n (the number of elements), instead of their individual index.
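The snippet under discussion is not reproduced in the text, so the following is only a minimal sketch of the pattern being described, not the original code. It assumes plain objects in place of input elements so that it runs outside a browser, and the handlers return their message instead of logging it. The names makeElements and attachHandlers are hypothetical and introduced purely for illustration.

```javascript
// Plain objects stand in for DOM <input> elements (an assumption for this sketch).
function makeElements(count) {
  return Array.from({ length: count }, () => ({ onclick: null }));
}

// Problematic pattern: `var i` is a single function-scoped variable,
// so every handler created in the loop closes over the same `i`.
function attachHandlers(elements) {
  var n = elements.length;
  for (var i = 0; i < n; i++) {
    elements[i].onclick = function () {
      return "This is element #" + i;
    };
  }
  return elements;
}

// Simulate clicking each of three elements after the loop has finished.
const messages = attachHandlers(makeElements(3)).map((el) => el.onclick());
console.log(messages);
```

Run under Node.js, every entry in messages reports element #3, the value of i once the loop has ended, which is the symptom described above.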

To fix this, you can use an Immediately Invoked Function Expression (IIFE) to create a new scope for each iteration, capturing the current value of i. Here’s the corrected code:

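    // Collect every <input> element on the page.
    var elements = document.getElementsByTagName('input');
    var n = elements.length;
    for (var i = 0; i < n; i++) {
      // The IIFE runs immediately, so each handler captures its own copy of i as index.
      (function(index) {
        elements[index].onclick = function() {
          console.log("This is element #" + index);
        };
      })(i);
    }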

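Alternatively, you can declare the loop counter with let instead of var. Introduced in ES6, let is block-scoped, and a for loop creates a fresh binding of i for each iteration, so each handler captures its own index: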

    var elements = document.getElementsByTagName('input');
    var n = elements.length;
    // let gives each iteration its own i, so no wrapper function is needed.
    for (let i = 0; i < n; i++) {
      elements[i].onclick = function() {
        console.log("This is element #" + i);
      };
    }

Human oversight and critical thinking are essential to mitigating these risks. Developers should view AI as a tool to assist, not replace, their expertise. By working alongside AI, human reviewers can catch nuanced issues that AI might overlook. For example, AI might fail to understand the context in which a particular piece of code operates, such as industry-specific regulations or company-specific coding standards. Additionally, certain bugs might only manifest under specific conditions that AI has not yet been trained to recognize.

It’s also important to consider the evolving nature of software development. New programming languages, frameworks, and paradigms continuously emerge, and AI models may lag in adapting to these changes. Human reviewers can bridge this gap by providing up-to-date knowledge and experience. AI can significantly improve the speed and thoroughness of code reviews, but human judgment should still be applied alongside it to ensure evaluations are complete and correct.

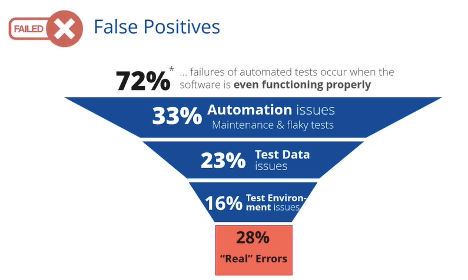

AI-driven code reviews are not immune to errors, often producing both false positives and false negatives. False positives occur when AI flags correct code as problematic. This frustrates developers and wastes time and effort as they address non-issues. Over time, false positives can diminish trust in AI tools, causing developers to overlook genuine warnings, similar to the “boy who cried wolf” scenario.

Conversely, false negatives are instances where AI fails to identify actual issues in the code. In the case of AI-generated code, these false negatives can lead to security loopholes or performance issues that go undetected, which emphasizes the importance of multi-layered analysis.

False negatives also create a false sense of security, which is dangerous because bugs and vulnerabilities remain undetected while the team believes the code has been checked. For example, AI might miss intricate security flaws that require a deeper understanding of the system’s architecture, or specific edge cases that only manifest under certain conditions.
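One way to keep track of false positives and false negatives is to compare the tool’s verdicts with those of human reviewers and compute precision and recall. The sketch below assumes each finding has been labelled by both the tool and a human; the field names flaggedByTool and confirmedByHuman, the function reviewMetrics, and the sample data are all hypothetical and invented for illustration.

```javascript
// Sample findings: the tool's verdict paired with a human reviewer's verdict.
// This data is made up for the example and is not a measurement of any real tool.
const findings = [
  { flaggedByTool: true, confirmedByHuman: true },   // true positive
  { flaggedByTool: true, confirmedByHuman: true },   // true positive
  { flaggedByTool: true, confirmedByHuman: true },   // true positive
  { flaggedByTool: true, confirmedByHuman: false },  // false positive
  { flaggedByTool: false, confirmedByHuman: true },  // false negative
  { flaggedByTool: false, confirmedByHuman: true },  // false negative
  { flaggedByTool: false, confirmedByHuman: false }, // true negative
];

function reviewMetrics(items) {
  let truePositives = 0;
  let falsePositives = 0;
  let falseNegatives = 0;
  for (const item of items) {
    if (item.flaggedByTool && item.confirmedByHuman) truePositives++;
    else if (item.flaggedByTool && !item.confirmedByHuman) falsePositives++;
    else if (!item.flaggedByTool && item.confirmedByHuman) falseNegatives++;
  }
  const flagged = truePositives + falsePositives;
  const realIssues = truePositives + falseNegatives;
  return {
    truePositives,
    falsePositives,
    falseNegatives,
    // Precision: share of the tool's warnings that were real issues.
    precision: flagged === 0 ? null : truePositives / flagged,
    // Recall: share of the real issues that the tool actually caught.
    recall: realIssues === 0 ? null : truePositives / realIssues,
  };
}

const metrics = reviewMetrics(findings);
console.log(metrics);
```

Low precision points to the alert-fatigue problem caused by false positives, while low recall points to undetected issues; tracking both over time gives a rough picture of whether the tool deserves the trust placed in it.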

Training developers to critically evaluate AI-generated suggestions is crucial so that they do not accept them unquestioningly.

AI models are trained on historical data, which may contain inherent biases. If left unaddressed, these biases can result in unfair outcomes, such as code from certain developers or communities being disproportionately flagged. This can perpetuate stereotypes and discourage diversity within development teams.

For example, if an AI model is trained predominantly on code written by a particular demographic, it may unfairly evaluate code from others who follow different coding conventions or styles. Higher false positive rates for specific groups are a clear sign of bias and can create additional hurdles for developers who are already underrepresented in the tech industry.

Ensuring AI tools are fair and inclusive requires a multi-faceted approach. Diverse training datasets encompassing various coding styles and practices can help mitigate bias, and regular audits of AI outputs can identify and address discriminatory patterns. Involving a diverse group of developers in training and evaluation also provides valuable perspectives that help create a more equitable tool.
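A regular audit of this kind could start by comparing false positive rates across groups. The sketch below is one possible approach under stated assumptions: it assumes an audit log in which each reviewed change records a group label (for example a team or a style convention), whether the tool flagged it, and whether a human later confirmed a defect. The record shape, the group names, and the function falsePositiveRateByGroup are hypothetical, and the data is invented.

```javascript
// Hypothetical audit log; the values are made up for illustration only.
const auditLog = [
  { group: "style-A", flagged: true, actuallyDefective: false },
  { group: "style-A", flagged: false, actuallyDefective: false },
  { group: "style-A", flagged: false, actuallyDefective: false },
  { group: "style-A", flagged: false, actuallyDefective: false },
  { group: "style-A", flagged: true, actuallyDefective: true },
  { group: "style-B", flagged: true, actuallyDefective: false },
  { group: "style-B", flagged: true, actuallyDefective: false },
  { group: "style-B", flagged: true, actuallyDefective: false },
  { group: "style-B", flagged: false, actuallyDefective: false },
  { group: "style-B", flagged: true, actuallyDefective: true },
];

function falsePositiveRateByGroup(records) {
  const totals = new Map();
  for (const { group, flagged, actuallyDefective } of records) {
    // The false positive rate only considers code that was actually fine.
    if (actuallyDefective) continue;
    const entry = totals.get(group) ?? { clean: 0, wronglyFlagged: 0 };
    entry.clean += 1;
    if (flagged) entry.wronglyFlagged += 1;
    totals.set(group, entry);
  }
  const rates = {};
  for (const [group, { clean, wronglyFlagged }] of totals) {
    rates[group] = wronglyFlagged / clean;
  }
  return rates;
}

const rates = falsePositiveRateByGroup(auditLog);
console.log(rates);
```

A large gap between groups, as in this invented data, does not prove bias on its own, but it is the kind of signal that would justify a closer look at the training data and the flagged changes.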

AI code review tools often require access to a project’s source code, including sensitive information. If these tools are not secure, they pose significant risks, such as data breaches or unauthorized access to proprietary code. Companies dealing with sensitive data or intellectual property should be particularly concerned about this.

Choosing reputable AI vendors with solid security practices is crucial for mitigating these risks. Vendors must provide transparent information about their security measures and adhere to industry standards and regulations. Rigorously checking AI-generated code for potential vulnerabilities and compliance issues is also essential to safeguarding your codebase from hidden threats. Internally, implement solid security practices to protect sensitive information, such as encrypting data and restricting access to AI tools.

In addition, it is essential to regularly conduct security assessments and audits of AI tools to identify and address potential vulnerabilities. By prioritizing security and privacy, organizations can confidently leverage AI for code reviews without compromising their sensitive data.

While powerful, AI often struggles to grasp the broader business logic or intent behind the code. It analyzes code based on patterns and rules but lacks the contextual understanding that human developers possess. This limitation can lead to incorrect suggestions or missed opportunities for optimization.

For example, AI might recommend changes that, while syntactically correct, conflict with the project’s overall design or business objectives. It may also fail to recognize the importance of certain critical code sections for future scalability or integration with other systems.

To avoid this, maintain a collaborative approach in which AI complements human expertise. Developers must review AI suggestions with the project’s goals and architecture in mind. Training AI models on domain-specific knowledge improves their contextual understanding. Integrating AI with human oversight keeps human developers’ nuanced judgment at the center of the code review process.

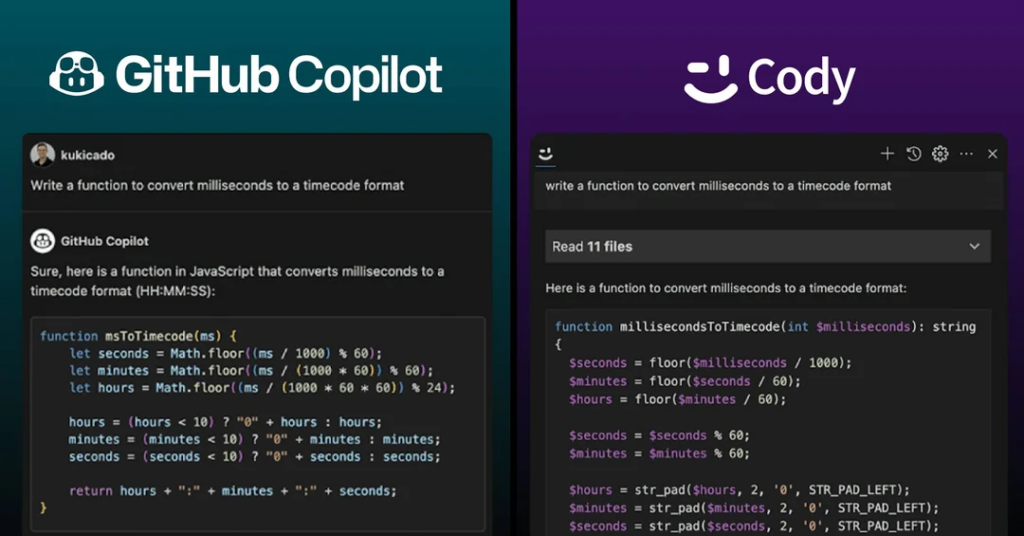

Combining AI with human expertise ensures a comprehensive code review process that leverages the strengths of both. When selecting AI tools, consider each tool’s accuracy, adaptability, and ability to integrate with your existing workflows. Customizing AI tools through proper training and fine-tuning allows them to cater to specific project needs, improving their effectiveness. Continuously monitor and evaluate the performance of AI tools to identify areas for improvement and ensure they remain aligned with evolving project requirements and coding standards. By following these best practices, development teams can maximize the benefits of AI code review while mitigating potential pitfalls.

The code review process benefits significantly from incorporating AI, which quickly identifies syntax errors and potential bugs, enforces coding standards, and uncovers security vulnerabilities. To fully harness AI’s potential, it is crucial to understand its limitations. Teams that rely on AI must manage false positives, bias, and security risks, and guard against the errors AI misses.

Developers can create a robust and effective code review system by combining AI with human oversight, selecting the right tools, and continuously training and evaluating AI models. This collaborative approach ensures that AI enhances human capabilities rather than replacing them, leading to higher-quality, more secure code.

Spectral offers advanced code security and governance solutions that integrate seamlessly into your development workflow. With Spectral, you can ensure that AI-generated and manually written code is thoroughly reviewed for vulnerabilities and compliance issues. Embrace the future of code review with Spectral and elevate your software development practices to new heights. Discover how Spectral can enhance your code review process today.
