
Many organizations build applications from microservices that communicate with other distributed services. Keeping that communication secure requires a “secure token service,” secure communication protocols such as mutual TLS (mTLS), authentication, authorization, and data encryption. As a result, developers may implement a range of point security solutions that each target one of these requirements, producing a complex security architecture that is challenging, if not impossible, to manage and maintain. Enter the Service Mesh, a dedicated infrastructure layer that many recommend for securing ephemeral, distributed applications.

So it’s about time that we start implementing the Service Mesh Architecture to build secure applications at any scale. In this blog, we’re taking a deeper look into what that means.

A Service Mesh is a dedicated, low-latency infrastructure layer that provides reliable, fast, and secure communication among application services that talk to each other through APIs.

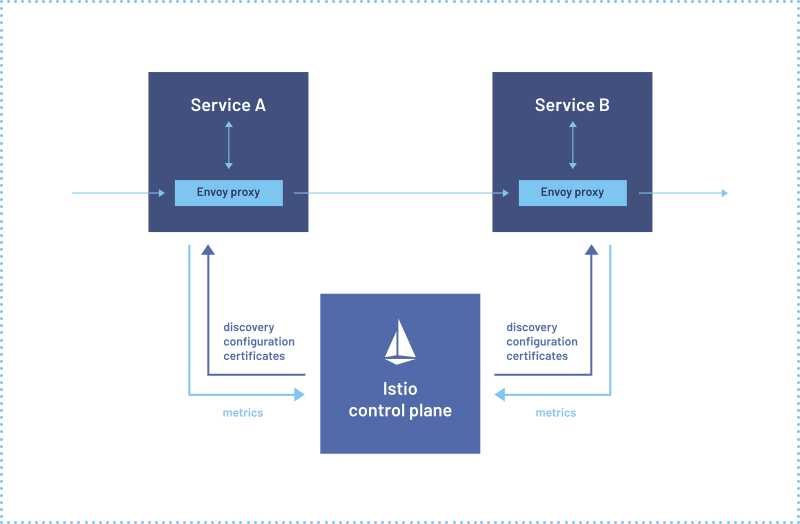

It’s important not to think of it as a “mesh of services” but rather as a “mesh of proxies” that provides service-to-service communication (service discovery, encryption, authentication, authorization), observability (logging and monitoring), and resiliency (circuit breaking).

Although it sounds good on paper, the Service Mesh has pros and cons, and it’s crucial to understand these before implementing it in an existing microservice application.

The first advantage is security. It is an industry-recommended practice for microservices to apply the same security standard to internal (service-to-service) communication as to external communication. Hence, all internal communication must be authenticated, authorized, and encrypted.

The Service Mesh helps enforce these standards by providing mutual Transport Layer Security (mTLS) between services. It therefore handles service authentication, enforces security policies, and encrypts the traffic flowing between services.

The second advantage is productivity. When developers have to implement the business logic and also write countless libraries to manage service-to-service communication, they have less time to develop features. A Service Mesh abstracts service-to-service communication away from developers so they can focus on features instead, improving productivity while the operations team manages the Service Mesh.

The third advantage is observability. Being able to monitor distributed components is crucial, and because every service-to-service call passes through the Service Mesh, it gives developers full visibility into that communication, which helps them debug and optimize distributed components.

On the downside, every request is proxied and verified before it reaches its destination, which adds latency to each request and could slow down a production application.

A Service Mesh also increases the number of running runtime instances (for example, one proxy per service instance), which can raise infrastructure costs.

A Service Mesh is most suitable for applications made up of a large number of microservices that provide vital services to their end users. In other words, you should use a Service Mesh if your application has highly demanding operational workflows. For example, companies like Netflix and Lyft rely on it to provide a robust experience to millions of users per hour.

If you wish to implement a Service Mesh on Kubernetes, consider an established implementation such as Istio or Linkerd. There are two alternative architectures that you may implement:

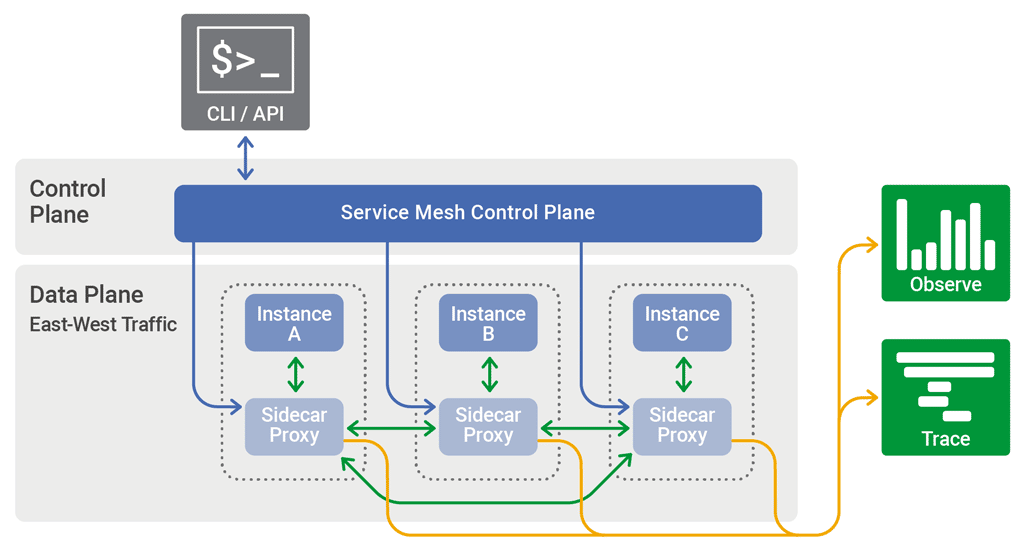

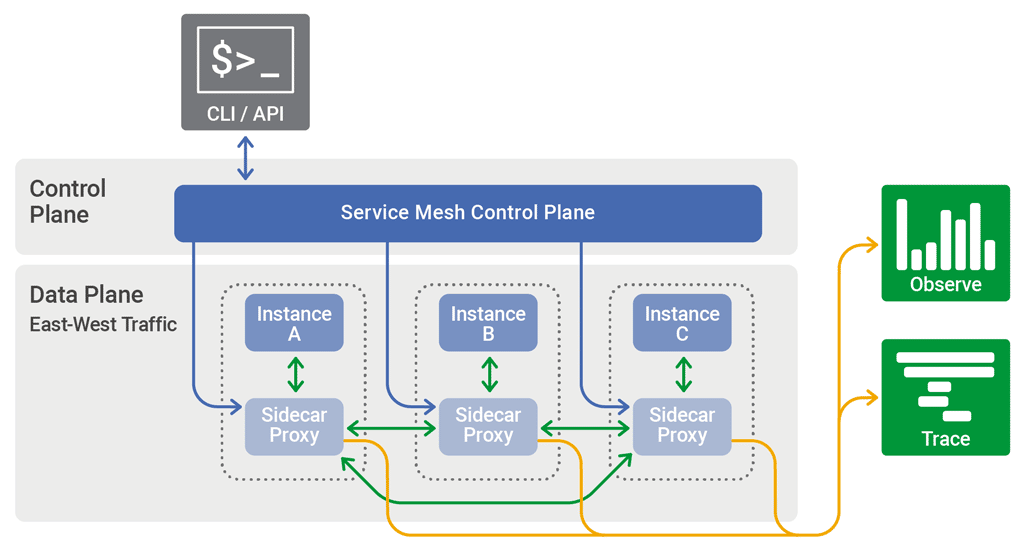

A Service Mesh has two components: the data plane and the control plane.

The data plane consists of proxies deployed to each microservice instance as a Sidecar.

The Sidecar is a design pattern that moves specific operations out of a microservice into a separate component deployed alongside it, reducing the microservice’s internal complexity. In a Service Mesh, the networking operations are isolated in the Sidecar, which acts as a proxy and manages all incoming and outgoing communication for its microservice.

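Additionally, the Sidecar is responsible for the other proxy features described earlier, such as service discovery, encryption, authentication, authorization, observability (logging and monitoring), and resiliency (circuit breaking).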

The control plane offers a GUI or a CLI that the operations team can use to generate and deploy the configuration that governs the behavior of the proxies in the data plane. Note that the data plane proxies must connect to the control plane and register through an API to obtain this configuration.

The diagram below shows the architecture of a Service Mesh, highlighting the components discussed above.

A Service Mesh provides more reliable inter-service communication, which improves the experience of client applications. That’s why Netflix, Twitter, and Lyft leverage the Service Mesh Architecture. For example, Netflix runs microservices that communicate with other microservices to serve millions of client requests, so it has strict requirements for how those services communicate.

The Sidecar deployed with each microservice enforces these requirements. Furthermore, Netflix and other companies with large-scale applications need to deploy new versions of microservices with minimal downtime using Blue/Green deployments. They can use the Service Mesh’s load balancing to implement “Traffic Routing,” sending a certain percentage of user requests to the new version while the remaining requests go to the old version. This lets them test the new version without affecting a large customer base. Once the new version is tested, they can route all traffic to it, completing a seamless Blue/Green deployment.
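The weighted traffic routing described above can be sketched as a function that picks a backend version in proportion to configured weights. The `Route` type and the 90/10 split are hypothetical; in a real mesh the split is declared in configuration (for example, route weights in an Istio `VirtualService`) and applied by the proxy, not coded by hand.

```go
package main

import (
	"fmt"
	"math/rand"
)

// Route is a hypothetical weighted destination, e.g. the old or new version.
type Route struct {
	Version string
	Weight  int // relative share of traffic
}

// totalWeight sums the weights of all routes.
func totalWeight(routes []Route) int {
	sum := 0
	for _, rt := range routes {
		sum += rt.Weight
	}
	return sum
}

// pick returns the version selected by r, where r is in [0, totalWeight).
// routes must be non-empty. Passing r in keeps the function deterministic.
func pick(routes []Route, r int) string {
	for _, rt := range routes {
		if r < rt.Weight {
			return rt.Version
		}
		r -= rt.Weight
	}
	return routes[len(routes)-1].Version // only reached if r is out of range
}

func main() {
	routes := []Route{{"blue (v1)", 90}, {"green (v2)", 10}}
	counts := map[string]int{}
	for i := 0; i < 10000; i++ {
		counts[pick(routes, rand.Intn(totalWeight(routes)))]++
	}
	// Expect roughly 90% of requests on v1 and 10% on v2.
	fmt.Println(counts)
}
```

Completing the cutover is then just a configuration change, for example setting the weights to 0 and 100.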

A monolithic application executes all function-to-function calls in-process, so those calls never cross the network. A microservice application, however, communicates with other services over the network (for example, via HTTP), so it needs authentication and authorization to secure the communication between these distributed services. If that communication is not secured, an attacker may intercept a request and gain access to sensitive data. With a Service Mesh, the Sidecar proxy deployed with each microservice handles these authentication and authorization needs and controls the traffic flow.

By default, it offers four forms of authentication.

Beyond authentication, it lets requests be authorized according to the principle of least privilege using Role-Based or Attribute-Based Access Control. These measures are enforced in the Sidecar proxy: each request is validated there and forwarded to the microservice only if it is authorized to access it.
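A role-based check of this kind can be sketched as a policy lookup the proxy performs before forwarding a request. The roles, permissions, and the `Policy.authorize` method are hypothetical; how a mesh identifies the caller and expresses the policy (for example, Istio’s `AuthorizationPolicy` resources) depends on the implementation.

```go
package main

import "fmt"

// Permission is an action on a resource, e.g. GET on /orders.
type Permission struct {
	Method string
	Path   string
}

// Policy maps each caller identity to its roles, and each role to the
// permissions it grants. Anything not listed is denied (least privilege).
type Policy struct {
	Roles       map[string][]string     // caller -> roles
	Permissions map[string][]Permission // role -> permissions
}

// authorize reports whether caller may perform method on path.
func (p Policy) authorize(caller, method, path string) bool {
	for _, role := range p.Roles[caller] {
		for _, perm := range p.Permissions[role] {
			if perm.Method == method && perm.Path == path {
				return true
			}
		}
	}
	return false // default deny
}

func main() {
	policy := Policy{
		Roles: map[string][]string{
			"frontend": {"order-reader"},
			"billing":  {"order-reader", "order-writer"},
		},
		Permissions: map[string][]Permission{
			"order-reader": {{"GET", "/orders"}},
			"order-writer": {{"POST", "/orders"}},
		},
	}
	fmt.Println(policy.authorize("frontend", "GET", "/orders"))  // true
	fmt.Println(policy.authorize("frontend", "POST", "/orders")) // false
	fmt.Println(policy.authorize("unknown", "GET", "/orders"))   // false
}
```

The sidecar would forward the request only when `authorize` returns true and reject it otherwise, so the microservice never sees unauthorized traffic.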

Zero Trust Security is a security framework that treats everything, even inside the security perimeter, as untrusted and potentially able to compromise the network. Hence, every entity requesting a resource must be authenticated, authorized, and continuously validated against a defined security configuration before it gains access to the requested data.

Before the Service Mesh, achieving Zero-Trust Security with microservices was difficult as developers had to manually implement libraries capable of verifying certificates, authenticating, and authorizing the request.

However, a Service Mesh helps you achieve Zero Trust Security with minimal complexity. It gives each service an identity for request authentication and authorization through a certificate authority that issues a certificate for every service.

The Service Mesh uses these identities to authenticate requests within the Service Mesh itself. Additionally, these certificates help developers to implement the principle of least privilege by defining authorization policies that specify the services that can communicate with each other.

Finally, it enforces mutual TLS (mTLS): data transmitted between services is encrypted, and a connection is established only if both microservices verify each other’s identity. This helps prevent man-in-the-middle attacks and can significantly improve your application’s security.

It’s clear by now that a Service Mesh helps to provide reliable and secure communication between microservices for applications with demanding workloads. In addition, it can help improve development productivity and observability and enforce a zero-trust network with minimal effort.

What’s left to say is that regardless of your infrastructure or whether you choose to implement it or not, enforcing security practices in your code and architecture is a must to protect your application from attacks. Watch this space as we share free content about the best practices that can help you do just that.
