
Developers don’t want to become obsolete, but we do want to become more efficient and effective at what we do. In addition to honing our skills and learning daily, we employ tools and techniques that, let’s be honest here, make our work easier.

Recent years saw an increase in the availability and use of Code AI solutions like TabNine AI code autocomplete and others. These tools use machine learning to help write or maintain code: trained on large datasets of codebases, they attempt to provide intelligent suggestions or insights on code.

A recent addition to this category of tools is Microsoft’s GitHub Copilot, “your AI pair programmer.” Copilot belongs to the class of AI-based code autocomplete solutions powered by machine learning and trained on enormous code bases. The mass of data employed (supposedly) allows Copilot to contextualize content better than most similar products and thus to provide suggestions tailored to specific function and variable names, comments, or strings within the code.

The model used in the core of Copilot was developed by OpenAI (the same company that developed GPT-3, the language model that made headlines a little over a year ago) and trained on “billions of lines of public code.”

Alongside impressive demonstrations and positive reviews of the beta version, the days following Copilot’s announcement saw highlights of problematic behavior on the “AI programmer’s” side, including exposing secrets (such as credentials) mistakenly left in public repositories and copying licensed code verbatim.

Granted, people crafted situations that were intended to trigger such behaviors. However, they might well make a software company think twice before signing up for the service, fearing it would inadvertently make them infringe on some license or otherwise put them in a tight spot.

Some of these issues stem, at least partially, from all public GitHub code being used in the model’s training, regardless of license, organization, or sensitivity. Such issues highlight the difficulty in curating and using a dataset for training a “Code AI” model.

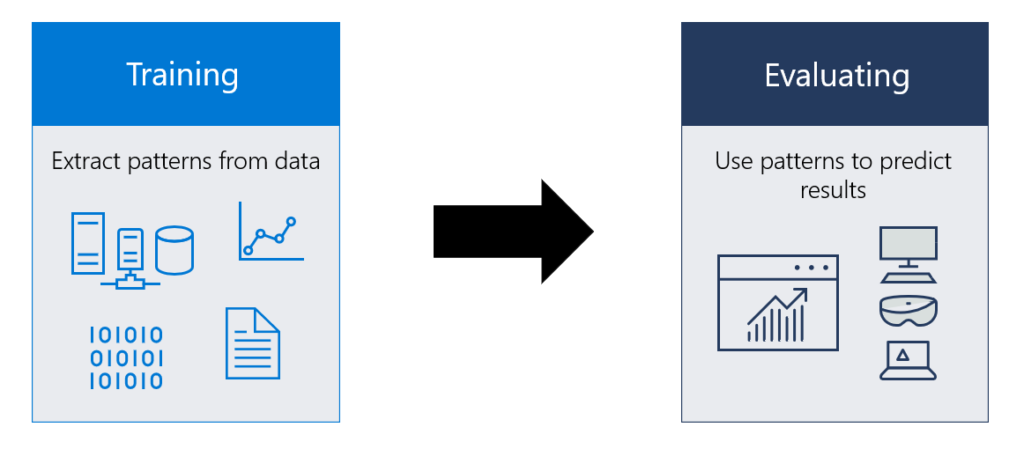

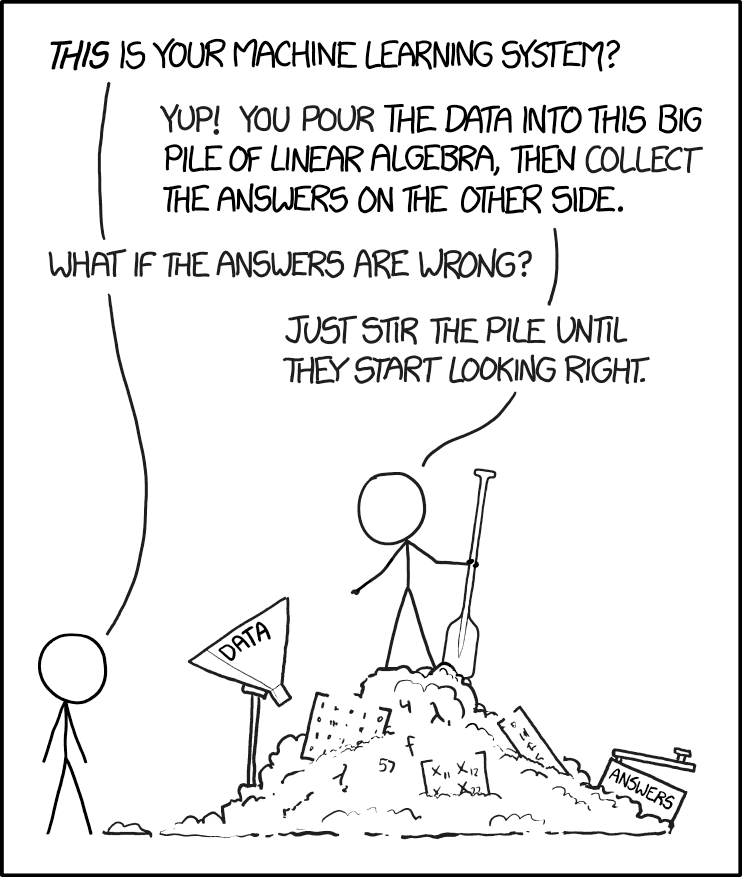

Machine learning models require data to “learn” from, and due to the complex nature of code, Code AI tools require a lot of such data. Fortunately, many code bases are publicly available all over the Internet, for example, on GitHub. Unfortunately, as highlighted by GitHub Copilot, naïvely using a uniform sample of publicly available code might not be the best idea.

In this post, we’ll examine the challenges as well as possible solutions to this conundrum.

For example, imagine that we’re interested in creating a language-agnostic tool that can classify whether a specific file contains server-side code. Approaching the task from the perspective of machine learning, we need to make some choices:

The model: we can choose anything from simple models such as k-nearest neighbors (KNN) to state-of-the-art deep learning, depending on the task’s difficulty and the amount of data (code) that we have to train the model.

The features: we can select anything from file metadata (extensions, neighboring file names, directory names, etc.) to features of the file’s contents.

To create a highly accurate classifier, we choose the deep learning approach – but such models require vast training datasets, so that’s where we focus our attention next.

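A simple work plan for constructing the dataset would be to sample repositories uniformly from a code hosting service, label their files by matching against a list of terms, and split the result into training and test sets.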

While this might provide us with a dataset that will get us rolling, this training set is likely to suffer from certain biases; what’s worse, since the “test” part of the dataset will suffer from the same biases, we might not detect them until the model reaches production. These biases include:

Due to the initial list of terms used to label the files, our ground truth data might be biased towards languages for which the terms are more accurate or appropriate. To make the labeling as language-agnostic as possible, we could limit the term list to include only the most common terms, maintain a term list that equally represents all languages (as much as possible), and standardize the way we match against the term list to account for different coding styles.
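One way to standardize matching across coding styles is to split identifiers into lowercase words before comparing them with the term list. The sketch below is only an illustration: the term list `SERVER_TERMS` and the function names are hypothetical, and a real term list would need to be curated per the guidelines above.

```python
import re

# Hypothetical term list, for illustration only.
SERVER_TERMS = {"listen", "request", "handler", "route"}


def identifier_tokens(source: str) -> set[str]:
    """Split identifiers into lowercase words, so that `requestHandler`,
    `request_handler`, and `RequestHandler` yield the same tokens."""
    tokens: set[str] = set()
    for identifier in re.findall(r"[A-Za-z_][A-Za-z0-9_]*", source):
        for part in identifier.split("_"):
            # Split camelCase / PascalCase parts into separate words.
            words = re.findall(r"[A-Z]+(?![a-z])|[A-Z]?[a-z]+|[0-9]+", part)
            tokens.update(word.lower() for word in words)
    return tokens


def matched_terms(source: str) -> set[str]:
    """Return the terms from SERVER_TERMS that appear in the source text."""
    return identifier_tokens(source) & SERVER_TERMS
```

With this normalization, `matched_terms("app.requestHandler()")` and `matched_terms("def request_handler(): pass")` find the same two terms, regardless of naming convention.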

Uniform sampling of repositories will provide us with the same distribution of programming languages present in the code hosting service. As the distribution of languages is long-tailed, many languages are likely to be un- or under-represented, resulting in a worse performance of the model on those languages.
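A simple alternative to uniform sampling is to cap the number of files taken per language, so that common languages do not crowd out the long tail. The following is a minimal sketch, assuming the files have already been collected as `(path, language)` pairs; the function name and the fixed seed are illustrative choices.

```python
import random
from collections import defaultdict


def sample_per_language(files, per_language, seed=0):
    """Return up to `per_language` randomly chosen paths for each language.

    `files` is an iterable of (path, language) pairs.
    """
    rng = random.Random(seed)  # fixed seed keeps the sample reproducible
    by_language = defaultdict(list)
    for path, language in files:
        by_language[language].append(path)

    sample = {}
    for language, paths in sorted(by_language.items()):
        # Rare languages contribute everything they have.
        k = min(per_language, len(paths))
        sample[language] = rng.sample(paths, k)
    return sample
```

Note that capping only limits over-represented languages; languages with very few files remain under-represented and may need targeted collection.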

Different languages have different propensities for server-side code, e.g., Java files probably contain much more server-side code than HTML files. Therefore, even if our dataset has a balanced representation of languages, each language might not have a proportional representation of labels. This might lead to the model learning over-simplistic behavior, e.g., constantly labeling Python code as positive (containing server-side code) and JavaScript as negative due to the inherent language biases.
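A quick way to spot this kind of bias is to compute the share of positive labels per language. This is a small sketch, assuming the labeled data is available as `(language, label)` pairs; the function name is hypothetical.

```python
from collections import Counter


def positive_rate_by_language(samples):
    """Return the fraction of positive labels for each language.

    `samples` is an iterable of (language, label) pairs, where label is
    True when the file contains server-side code.
    """
    totals, positives = Counter(), Counter()
    for language, label in samples:
        totals[language] += 1
        positives[language] += int(label)
    return {language: positives[language] / totals[language] for language in totals}
```

Rates close to 0 or 1 for a language suggest that a model could score well on it simply by predicting the majority label.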

Some of these issues might be solved (at least partially) by tuning our search in the code hosting service, but tuned searches are usually not enough. To fully mitigate those issues, we might need to employ more advanced smart sampling.

Tuning our search, e.g., to actively sample different languages in a balanced manner, might still fall short of the goal. One reason is that when filtering by repositories’ primary language, we will still get all the secondary languages from those repositories. This might tip the balance in favor of languages commonly used with others, such as HTML.
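To see how secondary languages creep in, it helps to count languages per file rather than per repository. The sketch below infers the language from the file extension; the tiny `EXTENSION_TO_LANGUAGE` map is a hypothetical stand-in for a proper language detector.

```python
from collections import Counter
from pathlib import PurePosixPath

# Hypothetical, deliberately tiny extension map, for illustration only.
EXTENSION_TO_LANGUAGE = {
    ".py": "Python",
    ".js": "JavaScript",
    ".html": "HTML",
    ".java": "Java",
}


def language_counts(paths):
    """Count files per language, skipping files with unknown extensions."""
    counts = Counter()
    for path in paths:
        extension = PurePosixPath(path).suffix.lower()
        language = EXTENSION_TO_LANGUAGE.get(extension)
        if language is not None:
            counts[language] += 1
    return counts
```

Running this over a repository whose primary language is Python may still show more HTML files than Python files, which is exactly the imbalance described above.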

Some strategies we could employ to mitigate the issues mentioned above:

With positive sampling, we over-sample positive samples from languages with a negative bias and under-sample positive samples from languages with a positive bias. This leads to a more balanced dataset but risks losing important information on languages with a positive bias and overfitting to the over-sampled samples from languages with a negative bias.

With negative sampling, in symmetry with the positive sampling strategy, we instead over- and under-sample negative samples based on the bias of each language.

A combined strategy uses both positive and negative sampling, with a more moderate amount of over- and under-sampling of each class, to achieve an unbiased dataset.
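The three sampling strategies can be sketched for a single language as follows. This is an illustration under stated assumptions: both classes are non-empty, over-sampling duplicates existing samples (with replacement), and the combined strategy meets at the midpoint of the two class sizes, which is one possible reading of “more moderate.” All names are hypothetical.

```python
import random


def resample_class(group, target, rng):
    """Under-sample without replacement, or over-sample with replacement."""
    if len(group) >= target:
        return rng.sample(group, target)
    return group + rng.choices(group, k=target - len(group))


def balance_language(positives, negatives, strategy, seed=0):
    """Balance one language's samples so both classes have equal size."""
    if not positives or not negatives:
        raise ValueError("both classes need at least one sample")
    rng = random.Random(seed)
    if strategy == "positive":
        # Only positives change: they are resampled to match the negatives.
        target = len(negatives)
    elif strategy == "negative":
        # Only negatives change: they are resampled to match the positives.
        target = len(positives)
    elif strategy == "combined":
        # Assumption: both classes meet at the midpoint of their sizes.
        target = (len(positives) + len(negatives)) // 2
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    return (
        resample_class(positives, target, rng),
        resample_class(negatives, target, rng),
    )
```

For a language with 2 positive and 8 negative files, the positive strategy yields 8 of each, the negative strategy 2 of each, and the combined strategy 5 of each. Since over-sampling here only duplicates files, the overfitting risk on over-sampled classes remains.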

As confident as we are that we’ve created an unbiased dataset, it is best to monitor each of our metrics and KPIs both generally and per language. This might shed light on biases that we missed or other hidden issues.
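Per-language monitoring can be as simple as grouping the evaluation results by language before computing each metric. The sketch below computes precision and recall per language, assuming evaluation records of the form `(language, true_label, predicted_label)`; the record format and function name are illustrative.

```python
from collections import defaultdict


def per_language_metrics(records):
    """Compute precision and recall separately for each language.

    `records` is an iterable of (language, true_label, predicted_label).
    A metric is None when its denominator is zero.
    """
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "fn": 0, "tn": 0})
    for language, truth, predicted in records:
        if predicted and truth:
            key = "tp"
        elif predicted:
            key = "fp"
        elif truth:
            key = "fn"
        else:
            key = "tn"
        counts[language][key] += 1

    metrics = {}
    for language, c in counts.items():
        predicted_positive = c["tp"] + c["fp"]
        actual_positive = c["tp"] + c["fn"]
        metrics[language] = {
            "precision": c["tp"] / predicted_positive if predicted_positive else None,
            "recall": c["tp"] / actual_positive if actual_positive else None,
        }
    return metrics
```

A language whose recall is far below the overall figure is a hint that the dataset, and not only the model, deserves another look.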

As mentioned earlier, while we can automate some parts of the data labeling (e.g., using a list of terms to match against), it still requires some manual curation. Since this might be a very labor-intensive task, instead of preemptively labeling the entire dataset we could use active learning. This would entail labeling only a portion of the dataset and using that portion to train the model. Then, we use the model to infer label probabilities for the rest of the dataset.

Some of the (previously unseen) samples will be very similar to samples from the labeled training set, resulting in high confidence in the model’s classification. However, significantly different samples will be more difficult for the model, leading to the label probabilities being closer to the model’s decision boundary.

We can prioritize those samples for manual curation and labeling, as they will likely have the most significant impact on the model. This process will allow us to obtain a model with similar performance to the one we would have if we labeled the entire dataset, potentially with only a fraction of the effort.
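The prioritization step is often called uncertainty sampling. A minimal sketch, assuming a binary classifier whose decision boundary sits at a probability of 0.5 and whose predictions are available as a mapping from sample ID to predicted probability (both assumptions, as is the function name):

```python
def most_uncertain(probabilities, budget, boundary=0.5):
    """Return the `budget` sample IDs whose predicted probability of being
    positive lies closest to the decision boundary."""
    ranked = sorted(
        probabilities,
        key=lambda sample_id: abs(probabilities[sample_id] - boundary),
    )
    return ranked[:budget]


# Example: with a labeling budget of two files, the two predictions
# nearest 0.5 are selected for manual review.
predictions = {"a.py": 0.98, "b.js": 0.52, "c.go": 0.10, "d.rb": 0.45}
to_label = most_uncertain(predictions, budget=2)
```

After the selected samples are labeled, they are added to the training set, the model is retrained, and the cycle repeats until performance stops improving or the labeling budget runs out.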

As with many machine learning tasks, collecting and labeling the dataset is an essential – and challenging – step, sometimes even more than training the model. Making sure to attend to the potential issues during the initial creation of the dataset can save a lot of time and trouble down the road.
